Photo by NEOM Unsplash

Photo by NEOM Unsplash

Table of Contents

Open Table of Contents

Transactions

Transactions are Unit of Work executed in the database.

Transactions may be used when actions that need to be rolled back in the case of possible failure.

In writing to multiple different tables, deleting, and operating on them transactions are helpful. (For example, we are writing to the

table-1 then writing to the table-2 but while we are writing to the table-2 an error is occurring, if this is

a single unit of work we would like to undo/rollback our writes to table-1)

We want atomicity. Statements in the transaction should succeed or fail as a whole.

Commit makes the transaction permanent, and Rollback cancels the transaction.

Example transaction: ‘A’ sends money to ‘B’

START TRANSACTION

- Withdraw 100$ from A

- Deposit 100$ to B

COMMITShould I use transactions for a single query?

It depends if we want to roll back the query. But in most scenarios, we don’t need to use transactions for a single query.

Transactions should be ACID.

Atomicity

- Transactions may include multiple statements (e.g. a set of SQL queries) but transactions are expressed as a unit and transactions can’t minimize even more.

- Transactions can only succeed or fail. If any of the statements in the transaction fails then the whole transaction will fail.

Consistency

- Transactions should be consistent across database constraints.

- Transactions change the state of the database from one to another.

- If the data comes to an illegal state, the transaction should result in an error. (e.g. we are trying to set negative numbers to the positive field.)

Isolation

- Two or more transactions should not affect each other.

- Multiple transactions should run in isolation from each other.

- The result of the transaction should be not visible to other transactions if the transaction is not committed changes.

- Reads and writes in the database should not affect other reads and writes in the same database.

- To increase performance transactions are executed concurrently but concurrency may cause isolation problems.

- We will explain isolation in detail in the section Concurrency Control in DBMS…

Durability

- Transactions should be permanent when they are committed. (it should remain committed even in the case of a power/system failure.)

- After the transaction is committed, changes should not be lost. To prevent data loss, the copy of the database can be kept in different locations also dbms uses non-volatile memory for avoiding data loss.

Transactions in SQL

In the SQL, We can use the statements below to control the transaction:

COMMIT: To save changes.ROLLBACK: To revert changes.SAVEPOINT: To create rollback points in transaction groups.SET TRANSACTION: To set transaction name/properties.

Basic Example

START TRANSACTION;

INSERT INTO users (name, email) VALUES ('John Doe', 'johndoe@example.com');

UPDATE accounts SET balance = SUM(balance) WHERE name = 'John Doe';

COMMIT;Example with Try-Catch

START TRANSACTION;

DECLARE EXIT HANDLER FOR SQLEXCEPTION

START TRANSACTION

ROLLBACK;

RESIGNAL;

END;

UPDATE accounts SET balance = 5000 WHERE user_id = 1;

UPDATE accounts SET balance = 1000 WHERE user_id = 2;

IF (SELECT balance FROM accounts WHERE user_id = 1) < 0 THEN

SIGNAL SQLSTATE '45000' SET MESSAGE_TEXT = 'Insufficient balance';

END IF;

COMMIT;- The

DECLARE EXIT HANDLER FOR SQLEXCEPTIONblock is used to catch any SQL exceptions that occur within the transaction. - The

RESIGNALstatement raises the exception again so that it can be handled by an outertry-catchblock if any. - The

SIGNALstatement raises an exception with message ‘Insufficient balance’

With SAVEPOINT

BEGIN;

INSERT INTO table1 VALUES (1);

SAVEPOINT my_savepoint;

INSERT INTO table1 VALUES (2);

ROLLBACK TO SAVEPOINT my_savepoint;

INSERT INTO table1 VALUES (3);

COMMIT;- In the example above, 1 and 2 are inserted but not 3.

Concurrency Control in DMBS

Photo by Liam Briese Unsplash

Photo by Liam Briese Unsplash

Executing transactions in the database sequentially (serial schedule) is not

efficient and execution of the next transaction may be delayed. But executing

sequentially ensures data consistency and in the sequential execution there are no concurrency issues.

To increase performance, Transactions are executed concurrently(concurrent schedule). But

in the concurrent execution, we may encounter concurrency problems. For preventing concurrency problems, databases provide Concurrency Control Protocols.

Concurrency Control Protocols

There are two types of concurrency control protocols:

- Lock Based Protocols

- Timestamp Based Protocols

Lock Based Protocols

In the lock-based protocols, transactions should acquire the lock for changing or reading data. In the DBMS, two types of locks exist:

1. Shared Lock

Simultaneous reading of the data by multiple transactions. If the transaction is acquired the shared lock, it can’t change the data it can only read the data.

2. Exclusive Lock

It is a lock that is acquired for changing the data. At any given time only one transaction can hold an exclusive lock. If one transaction holds an exclusive lock, other transactions can’t acquire a shared or exclusive lock for the same data.

There are two types of lock-based protocols:

1. Two Phase Locking

It is a widely used technique. It provides a strict ordering for acquiring and releasing the lock. It has two phases:

- Growing Phase: In this phase, the transaction acquires the lock before the modification of the data. After the lock is acquired, it is not released until the transaction finishes.

- Shrinking Phase: The Transaction releases the lock after its operation completes in this phase. The transaction can’t acquire the lock again after the release of the lock.

2.Strict Two Phase Locking

It is similar to two-phase locking. In the Two-Phase Locking transaction can release the lock before it commits but in the strict two-phase locking, it can’t.

Timestamp Based Protocols

There is a timestamp of the transaction when the transaction enters the system. Coordination is handled with timestamps. Transactions are executed in the correct order with the help of timestamps.

In the DBMS, several isolation levels exist and they use concurrency control protocols for preventing data loss.

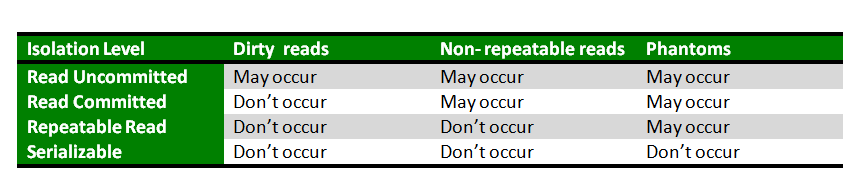

Isolation Levels

Multiple users can access the same data at the same time with lower

isolation levels but lower isolation levels can cause certain concurrency problems.

(e.g. Dirty Read) Conversely, at the higher isolation level, fewer users can access the same at the

same time but it ensures data consistency. Higher isolation levels may increase the block time of the system and other transactions.

It requires more system resources.

Read Uncommitted

- It is the lowest isolation level.

- It is only for ensuring the read of data that is physically correct.

- At this level, transactions are not isolated.

Read Committed

- It is the default isolation level.

- Write locks are held until the transaction finishes, and Read locks are released when the

SELECToperation is done therefore nonrepeatable-read can occur at this level. - Range locks are not managed at this level.

Repeatable Read

- Write and Read locks are required until the transaction finishes.

- Range locks(i.e.

WHERE) are not used so Phantom reads can occur.

Serializable

- It is the highest isolation level.

- Read and Write locks are required until the transaction is done.

- For the

WHEREqueries range locks are required. - Transactions are isolated from each other completely.

- One transaction can block the other one therefore performance may decrease.

A serializable execution is defined to be an execution of the operations of concurrently executing SQL-transactions that produces the same effect as some serial execution of those same SQL-transactions.— Second Informal Review Draft ISO/IEC 9075:1992, Database Language SQL- July 30, 1992

In the MySQL default global transaction level can be specified with: --transaction-isolation=level

Which Isolation level should I use?

In most situations, the default isolation level read committed is sufficient. The lowest isolation level increases performance and the highest isolation level increases data consistency so it depends according to the system requirements.

Concurrency Problems in DBMS

While executing transactions concurrently several concurrency problems can occur regarding isolation level.

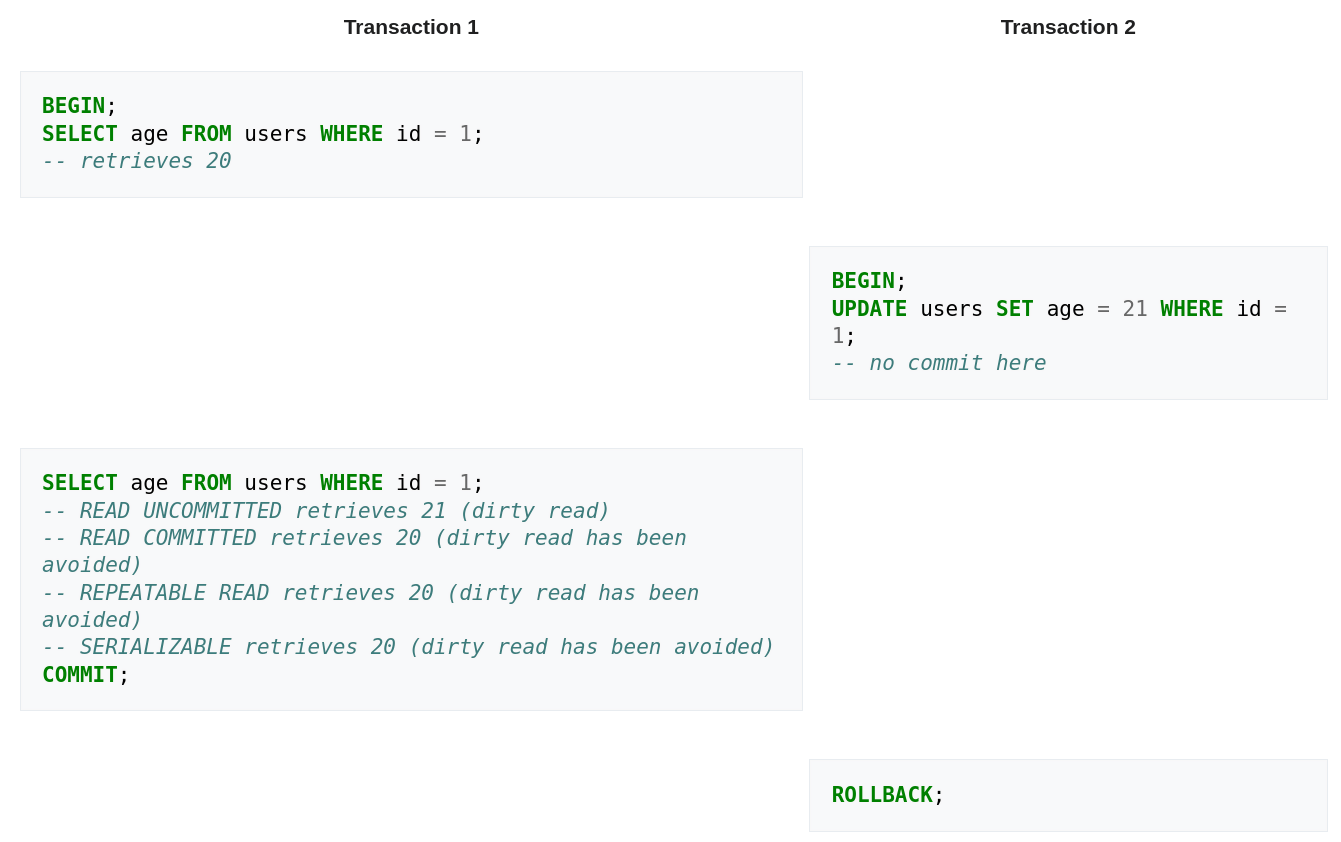

Dirty Reads

It occurs if the transaction takes a row and the other transaction updates the same row without committing.

In the example below:

- Transaction 1 reads the

agevalue as 20. - Transaction 2 updated the

agevalue to 21. - When Transaction 1 reads the

agevalue again.- If the isolation level is the lowest one read-uncommitted it takes updated value 21. (if the other transaction rollbacks its change our value of 20 is invalid)

- Otherwise, it takes 20

Image from Wikipedia

Image from Wikipedia

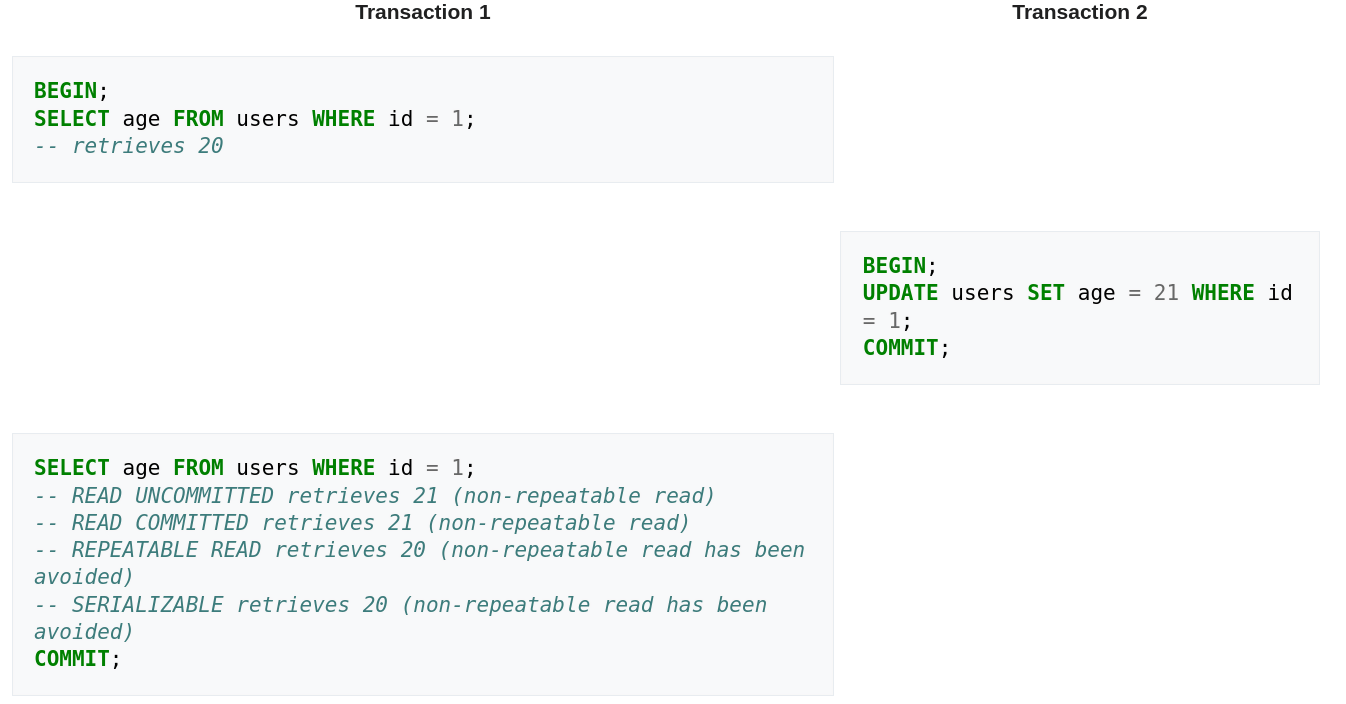

Non-repeatable Reads

It occurs when the transaction queries a row twice and between these queries another transaction updates a row and commits.

In the example below:

- Transaction 1 reads

agevalue as 20. - Transaction 2 updates

agevalue to 20 and commits. - Transaction 1 reads

agevalue again.- If the isolation level is read-committed or lower it reads 21

- Otherwise, it reads 20.

Image from Wikipedia

Image from Wikipedia

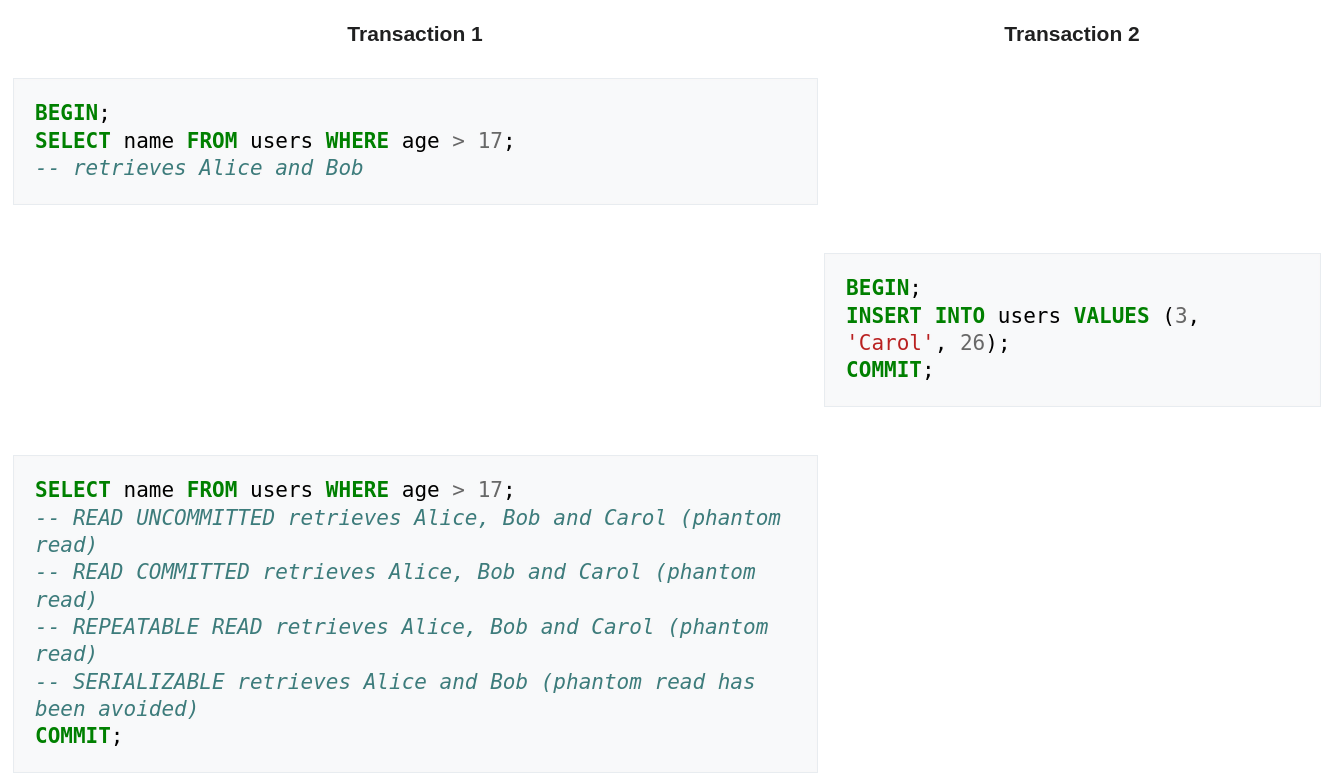

Phantom Reads

If the transaction takes single or multiple rows twice and between these another transaction adds new rows or deletes existing ones and commits. For prevention, range locks are required.

In the example below:

- Transaction 1 reads rows whose

agevalue is higher than 17. - Transaction 2 appends a row with

agevalue 26 - Transaction 1 reads rows again whose

agevalue is higher than 17.- If the isolation level is lower than the serializable, the newly added row (Carol) is included in the query.

- Otherwise not.

Image from Wikipedia

Image from Wikipedia

Comparison Table

Thanks for reading…

Summary

- Transactions are Unit of Work executed in the database.

- Transactions should be ACID:

- Atomicity: Transactions can only succeed or fail as a whole.

- Consistency: Transactions should be consistent across database constraints.

- Isolation: Two or more transactions should not affect each other.

- Durability: Transactions should be permanent when they are committed.

- We can use

COMMIT,ROLLBACK,SAVEPOINT,SET TRANSACTIONstatements in SQL for working with transactions. - There are two concurrency control protocols in DBMS:

- Lock Based: Transactions should acquire the lock for changing or reading data.

- Timestamp Based: Coordination of transactions is handled with timestamps.

- In the DBMS, several isolation levels exist.

- Read Uncommitted: The lowest one.

- Read Committed: Default.

- Repeatable Read: Range locks are not used. Phantom-Read may occur.

- Serializable: The highest one.

- While executing transactions concurrently several concurrency problems can occur:

- Dirty Reads: If other transaction updates data and does not commit.

- Non-repeatable reads: If other transaction updates data and commits.

- Phantom reads: If other transaction inserts new data, or deletes existing one and commits.

References

- Stack Overflow — What is The Best Transaction Isolation Level

- Stack Overflow — How to Set Transaction Iso. Level

- Isolation — Wikipedia

- Wikipedia — ACID

- StackOverflow — When Should I Use Transaction in My Queries

- StackOverflow — When Should I Use MySQL Transactions

- ACID — BMC Blogs

- How to Use MySQL Transactions

- SQL Transactions — TutorialsPoint

- Geek For Geeks — Concurrency Control